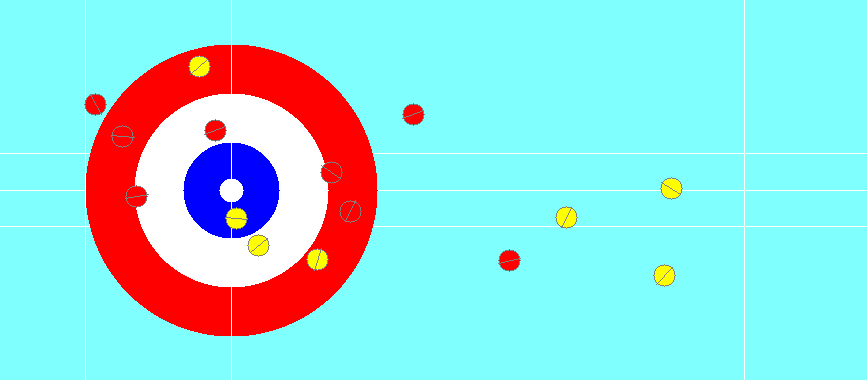

Lessons Learned from AI Curling

After 6 months of working on AI curling - attempting to achieve superhuman level performance in a simulated curling environment (Figure 1) - I've reached the time where the best next step would be to restart and rewrite the whole thing using Jax/Mojo. I've come a long way since the project's inception and when you can see only a few peaks in advance, you don't bring swimming trunks to the desert.

Some side projects are also starting to demand more attention so for now, I put down the stones, broom and shoes and move on to the next challenge. 6 months seems like a long time to spend with no concrete deliverable (except reproducing (Maeno, 2013) with a simulated curling environment). Fortunately, what I've learned more than makes up for the absence of superhuman AI curlers.

The most important lesson from this project applies to almost every project I'll ever do in the future: how to start. First, I need an idea - usually a problem or an experiment. I have a list of these written down waiting for spare time and experience to reject them or take them on. Then comes a plan done with pen and paper away from the keyboard. "Weeks of programming can save hours of planning." Followed by git init. This is not the first project that has been plagued with the absence of a full version history and in the same way I used to lose homework by not pressing Ctrl+s frequently enough, I have lost enough time reprogramming after deleting code and then deciding I need it again.

There are many other general lessons I learned but I want to share more of the intuitions that I have developed as you can learn most of what I would have written by doing a few projects and realising "this doesn't work for me". There's no journal for failed experiments so lots of things that seem obvious but no one's tried probably have been tried but failed. This isn't always the case but I do a lot of experiments I don't expect to work because I want to know why they don't work.

During this project, the problem gradually transitioned from curling to continuous action spaces and I spent a lot of time with Mujoco (Todorov et al., 2012) because while I managed a ~100x speedup from my original curling environment, the simulation speed was the main limitation for the research. Mujoco environments and two-player continuous games are very different but lots of what you learn in one can be applied in the other.

I don't want this to turn into "I tried X and it didn't work because of Y. Then I tried Z..." so I'm just going to focus on the intuitions I built up rather than the process that got me there. Maybe that reveals the most important part and leaves little evidence for you to trust what I say but just look at the GitHub code and see what the commits are (from 10% of the way in). Maybe in the future, I'll even add comments to my code in case other people read the earlier versions.

Starting in approximately chronological order, getting neural networks to approximate a continuous probability distribution by sampling is hard. The way this was defined originally is through a function \(f_\theta: \mathbb R^d \times S \rightarrow \mathbb R^3\), where \(z \sim \mathcal N(0, I_d)\) is sampled from a diagonal multivariate normal distribution. \(s \in S\) is an element of the sample space and \(f_\theta(z, s)\) the "curling vector" consists of initial velocity, angle and curl. Sampling \(z\) is easy and ideally, we can then use \(f_\theta(z, s)\) as candidate stone throws.

This looks a lot like a conditional image generation task. Image generation may seem like a disproof by counterexample to the difficulty of the problem but GANs (Goodfellow et al., 2014) and diffusion models (Ho et al., 2020) try to model the space rather than the distribution. The images do not maximise a reward function (subtly for GANs, this is a loss function rather than a reward function). Without overdoing the comparison to image generation models, which solve a different problem, assigning probabilities (or a PDF) to a sample and determining the rest of the distribution from a few samples is where the main problem lies.

The way to get around this is to approximate the distribution. For curling, we can realistically fix the action space so the agent makes "reasonable throws" that land in a legal area or could clear other stones. But even so, what should this distribution look like?

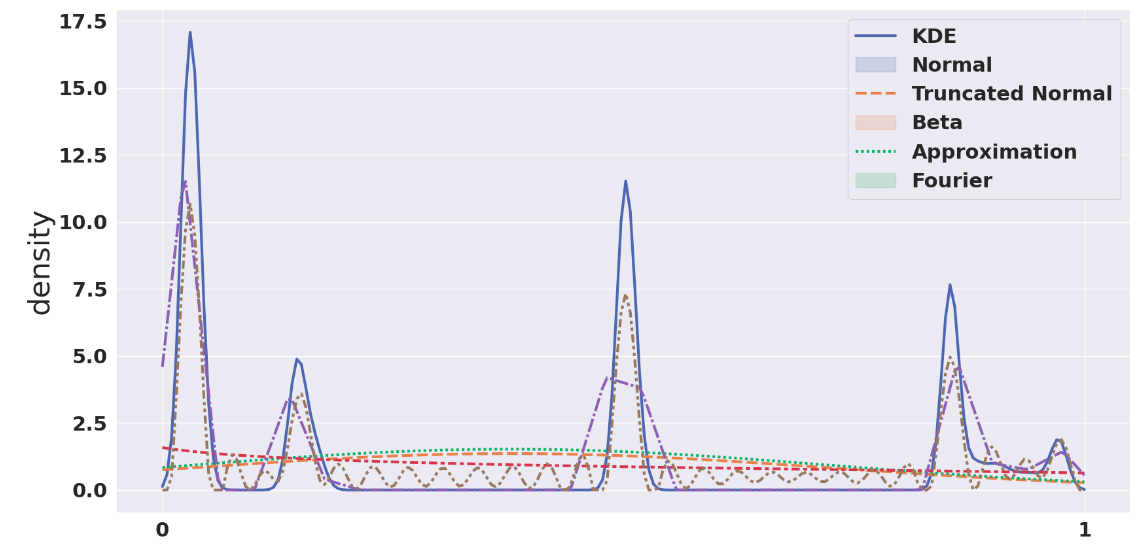

I tried many ways of approximating this distribution (Figure 2). First a normal distribution with clipping. The bell curve may be a general-purpose tool and appear in many places but approximating arbitrary distributions is not its forte. Even if we truncate, that doesn't help much either. What about a beta distribution, as suggested in (Moerland et al., 2018)? Again, this is not the way forwards. A beta distribution has a purpose too but it isn't a general-purpose tool. Additionally, the distribution will diverge if \(\alpha > 1\) or \(\beta > 1\), which gives NaNs during training (an RL nightmare). What about approximating the distribution using the Fourier Series of the PDF? This seems like a great idea and does a decent job of approximating the distribution. As intelligent as this seems, it's very difficult to learn the coefficients and you wouldn't train an image model by showing it the raw JPEG values. It is like using an electric drill to put a nail in a wall: what you need is a hammer. Just approximating the coefficients works best. And with a softmax layer, you can make sure your CDF values sum to 1 and calculate the PDF from that. For me, this feels like an "ugly" solution but so does "more data, more compute" (Sutton, 2019).

If you approximate the policy with this distribution, the next problem is how to approximate the value. The natural way of approaching it, where you use a linear activation in the last layer of an MLP, results in a bias that's the mean of the data and weakly correlated noise from the weights.

Features have meanings and trying to get them to correlate well (correlation is a linear association) with the value function is incredibly hard. Instead, I implemented a variant of the value support from (Schrittwieser et al., 2019):

For value and reward prediction we scale targets using an invertible transform \(h(x) = sign(x)(\sqrt{|x| + 1} - 1 + \varepsilon x)\), where \(\varepsilon = 0.001\). We then apply a transformation \(\varphi\) to the scalar reward and value targets in order to obtain equivalent categorical representations. ... Under this transformation, each scalar is represented as the linear combination of its two adjacent supports, such that the original value can be recovered by \(x = x_{low} \times p_{low} + x_{high} \times p_{high}\). As an example, a target of \(3.7\) would be represented as a weight of \(0.3\) on the support for \(3\) and a weight of \(0.7\) on the support for \(4\). The value and reward outputs of the network are also modeled using a softmax. During inference the actual value and rewards are obtained by first computing their expected value under their respective softmax distribution and subsequently by inverting the scaling transformation. Scaling and transformation of the value and reward happens transparently on the network side and is not visible to the rest of the algorithm.\(\)

This was a huge improvement. I ran some separate experiments on other datasets and you obtain similar improvements with a 2-layer value support compared to a 2-layer MLP on a vanilla regression task. Changing the problem from approximating \(f(x)\) to approximating \(P(f(x) \approx n)\) is much easier.

The improvement to end with is one that transformed the project. My earliest approaches could hardly beat a random player but Monte-Carlo Tree Search still feels like the most impressive creation in the field of reinforcement learning. Using full random rollouts worked very well with an almost uniform policy and this became my baseline, which took a very long time to beat. The first major reason for this is that you generate a counterfactual on each node. Now you can evaluate the strength of your policy relative to other decisions you could have made at that point. Without search, all you can do is approximate and update the policy based on this so having additional data for the same state is incredibly valuable.

The second idea that makes MCTS so powerful, an intuition I built up independently but other people have had (Huszar, 2017), is that an MCTS is a policy improvement operator. An MCTS converges to an optimal policy (under some constraints) (Kocsis et al., 2006), so if you run your policy with MCTS, the resulting policy is very likely to be better. You just keep repeating this until the MCTS returns the same policy - reaching the Nash Equilibrium - and that means you've found the optimal policy. Although in practice, you'd probably stop earlier.

AI curling has taught me a lot about a lot and there isn't a substitute for just dedicating time to projects. It hasn't been easy, but this is what has made it so enjoyable. It is my largest and hardest solo project to date and hence the one I've learned the most from. As I move forward, armed with much more experience, I can only look forward to the next challenge.

Bibliography

J.Achiam. Proximal policy optimization. Proximal Policy Optimization - Spinning Up documentation, 2020.

I.Goodfellow, J.Pouget-Abadie, M.Mirza, B.Xu, D.Warde-Farley, S.Ozair, A.Courville, Y.Bengio. Generative Adversarial Networks. CoRR, 2014.

J.Czech, P.Korus, K.Kersting. Monte-Carlo Graph Search for AlphaZero. arXiv preprint arXiv:2012.11045, 2020.

T.Moerland, J.Broekens, A.Plaat, C.Jonker. A0C: Alpha Zero in Continuous Action Space. CoRR, 2018.

D.Silver, J.Schrittwieser, K.Simonyan, I.Antonoglou, A.Huang, A.Guez, T.Hubert, L.Baker, M.Lai, A.Bolton et al.. Mastering the game of Go without human knowledge. Nature, 2017.

D.Silver, T.Hubert, J.Schrittwieser, I.Antonoglou, M.Lai, A.Guez, M.Lanctot, L.Sifre, D.Kumaran, T.Graepel et al.. Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm. arXiv preprint arXiv:1712.01815, 2017.

S.Thakoor, S.Nair, M.Jhunjhunwala. Learning to play othello without human knowledge. GitHub, 2016.

R.Sutton. The Bitter Lesson. The Bitter Lesson, 2019.

M.Nomura, S.Watanabe, Y.Akimoto, Y.Ozaki, M.Onishi. Warm Starting {CMA-ES} for Hyperparameter Optimization. arXiv preprint arXiv:2012.06932, 2020.

J.Ho, A.Jain, P.Abbeel. Denoising Diffusion Probabilistic Models. CoRR, 2020.

M.Janner, Y.Du, J.Tenenbaum, S.Levine. Planning with Diffusion for Flexible Behavior Synthesis. CoRR, 2022.

S.Huang, R.Dossa, A.Raffin, A.Kanervisto, W.Wang. The 37 Implementation Details of Proximal Policy Optimization. ICLR Blog Track, 2022.

F.Huszar. AlphaGo Zero: Minimal policy improvement, expectation propagation and other connections. inFERENCe, 2017.

T.Yee, V.Lisy, M.Bowling. Monte Carlo Tree Search in Continuous Action Spaces with Execution Uncertainty. Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, 2016.

D.Silver. RL Course by David Silver - Lecture 4: Model-Free Prediction. YouTube, 2015.

E.Todorov, T.Erez, Y.Tassa. MuJoCo: A physics engine for model-based control. 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2012.

J.Schrittwieser, I.Antonoglou, T.Hubert, K.Simonyan, L.Sifre, S.Schmitt, A.Guez, E.Lockhart, D.Hassabis, T.Graepel et al.. Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model. arXiv preprint arXiv:1911.08265, 2019.

N.Maeno. Dynamics and curl ratio of a curling stone. Sports Engineering, 2013.

L.Kocsis, C.Szepesvári. Bandit Based Monte-Carlo Planning. Lecture Notes in Computer Science, 2006.

W.Federation. THE RULES OF CURLING and Rules of Competition. World Curling Federation, 2022.

A.Raffin, A.Hill, A.Gleave, A.Kanervisto, M.Ernestus, N.Dormann. Stable-Baselines3: Reliable Reinforcement Learning Implementations. Journal of Machine Learning Research, 2021.

J.Lee, W.Jeon, G.-H.Kim, K.-E.Kim. Monte-Carlo Tree Search in Continuous Action Spaces with Value Gradients. Proceedings of the {AAAI} Conference on Artificial Intelligence, 2020.

Code

The code is available on GitHub: https://github.com/George-Ogden/betacurl