BERT Embedding Math

Introduction

The embeddings of the Bidirectional Encoder Representations from Transformers (BERT) model (Devlin et al., 2018) are not fully understood. These embeddings capture the semantic properties of words, and embeddings in general are a fundamental part of natural language processing (NLP). By mapping a discrete set of tokens into a continuous space, they condense information about words and represent a huge vocabulary in a much lower-dimensional space. In BERT, the embeddings make up the first layer of the model, which is the layer we investigate here.

Background

BERT was a key turning point in the field of NLP, yet, despite its release in 2018, there is still much to be learned about the model and its key architectural component, the transformer (Vaswani et al., 2017). BERT's embedding layer converts discrete tokens into a continuous vector space whose dimensionality is significantly smaller than the vocabulary size. This vector space contains semantic information about words, but not in the linear way that one might expect.

The additive property of embeddings was observed in unsupervised word embeddings such as GloVe (Pennington et al., 2014), even before the advent of transformer-based models like BERT. Since words, especially nouns, can be viewed as sets of semantic properties, modifying their embeddings can alter how the model interprets them.

Experiments

Countries and Cities

Countries and cities have unambiguous semantics representing location, which makes them an ideal starting point. Looking at the clustering of the cities (Figure 1), we can see that cities from the same country cluster together, which we expect is due to a common set of semantics along a few dimensions that represent the country.
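One simple way to put a number on this clustering, without relying on a 2-D plot, is to compare the average cosine similarity of city pairs from the same country with that of pairs from different countries. The sketch below assumes the embeddings are available as a plain dictionary from word to vector; the helper `cluster_gap` and the tiny 3-dimensional vectors are hypothetical, chosen only to exercise the code, and are not BERT's real embeddings.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def cluster_gap(embeddings, country_of):
    """Mean within-country similarity minus mean cross-country similarity.

    A positive gap means cities from the same country sit closer together.
    """
    within, across = [], []
    for a, b in combinations(embeddings, 2):
        sim = cosine(embeddings[a], embeddings[b])
        (within if country_of[a] == country_of[b] else across).append(sim)
    return sum(within) / len(within) - sum(across) / len(across)


# Hypothetical vectors: the first two dimensions loosely encode the country.
cities = {
    "rome": [1.0, 0.1, 0.3],
    "milan": [0.9, 0.0, 0.5],
    "paris": [0.1, 1.0, 0.4],
    "lyon": [0.0, 0.9, 0.2],
}
country = {"rome": "italy", "milan": "italy", "paris": "france", "lyon": "france"}
print(round(cluster_gap(cities, country), 3))
```

With real embeddings, the same function would be applied to the rows of BERT's embedding matrix for each city token.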

Next, we try to change the location of Rome, echoing the kind of factual edit made in the ROME paper (Meng et al., 2022), which modifies factual associations in GPT. We modify the embedding of Rome to become

$$\text{Rome} - \text{Italy} + \text{France}$$

As a result, the predicted token for "Rome is the capital of [MASK]." changes from Italy to France (Figure 2).

Sentence: Rome is the capital of [MASK].

Modification: \(\mathbf{\text{Rome}} = \text{Rome} - \text{Italy} + \text{France}\)

Predicted label: France

Confidence: 92.9%
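The modification itself is a single row update of the embedding matrix. A minimal sketch, assuming each word maps to exactly one token id through a `vocab` dictionary and the matrix is a list of rows; `edit_embedding` is a hypothetical helper and the 2-dimensional values are made up. In a PyTorch model loaded with Hugging Face Transformers, the analogous matrix is exposed as `model.get_input_embeddings().weight`.

```python
def edit_embedding(matrix, vocab, target, remove, add):
    """Replace the row for `target` with target - remove + add, in place.

    Assumes every word is a single token whose row index is vocab[word].
    """
    # Read all three rows before overwriting the target row.
    t, r, a = (matrix[vocab[word]] for word in (target, remove, add))
    matrix[vocab[target]] = [x - y + z for x, y, z in zip(t, r, a)]


# Hypothetical toy vocabulary and 2-d embedding matrix (not BERT's values).
vocab = {"rome": 0, "italy": 1, "france": 2}
matrix = [
    [1.0, 0.5],  # rome
    [1.0, 0.0],  # italy
    [0.0, 1.0],  # france
]
edit_embedding(matrix, vocab, "rome", "italy", "france")
print(matrix[vocab["rome"]])  # [0.0, 1.5]
```

Only the target row changes; the rows for the other words are left untouched, so the rest of the model sees exactly the same inputs as before.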

Sentence: Pakistan is the south of [MASK].

Modification: \(\mathbf{\text{Pakistan}} = \text{Pakistan} - \text{Asia} + \mathbf{\text{Continent}}\)

| Continent | Prediction | Confidence |
|---|---|---|
| Asia | Afghanistan | 37.8% |
| Africa | Angola | 4.8% |
| Europe | France | 4.0% |
| Oceania | Paris | 1.2% |

The embedding maths also works on a country-continent scale (Figure 3). The results are less clear-cut than when changing the location of cities, which we attribute to Rome having a semantically stronger relation to Italy than Pakistan has to Asia.
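Each row of the table above is a separate edit applied to the unmodified embeddings, so a sketch should start from a fresh copy every time rather than stacking edits. Note that the Asia row is effectively the unedited baseline, since \(\text{Pakistan} - \text{Asia} + \text{Asia} = \text{Pakistan}\) up to floating-point rounding. The helper `edited_copies` and the toy vectors are hypothetical.

```python
import copy


def edited_copies(embeddings, target, remove, replacements):
    """Yield (replacement, edited) pairs, each holding one fresh edit of `target`.

    Every edit starts from a deep copy of the original embeddings, so edits
    never accumulate across iterations.
    """
    for new in replacements:
        edited = copy.deepcopy(embeddings)
        edited[target] = [
            t - r + n
            for t, r, n in zip(embeddings[target], embeddings[remove], embeddings[new])
        ]
        yield new, edited


# Hypothetical 2-d vectors, for illustration only.
embeddings = {
    "pakistan": [0.7, 0.3],
    "asia": [0.6, 0.1],
    "africa": [0.1, 0.8],
}
for continent, edited in edited_copies(embeddings, "pakistan", "asia", ["asia", "africa"]):
    print(continent, edited["pakistan"])
```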

Colour

BERT (Devlin et al., 2018) was pretrained only on text, so it has no visual information about the world. As a result, we see a phenomenon similar to the Black Sheep Problem (Daumé, 2016), where language models assign a disproportionately high probability to a sheep being black. Because sheep are generally white, their colour is rarely mentioned, so the phrase "black sheep" occurs far more often than "white sheep" in the training corpus.
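The frequency argument can be illustrated by counting over a tiny, made-up corpus: estimating which colour precedes "sheep" from raw text favours "black", because the unremarkable case (a white sheep) is usually just called "sheep". The helper `colour_given_noun` is hypothetical, and simple bigram counting is a stand-in used to illustrate this reporting bias; it is not how BERT is trained.

```python
from collections import Counter


def colour_given_noun(sentences, noun, colours):
    """Relative frequency of each colour word appearing directly before `noun`."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        # Look at every adjacent (previous word, word) pair.
        for prev, word in zip(tokens, tokens[1:]):
            if word == noun and prev in colours:
                counts[prev] += 1
    total = sum(counts.values())
    return {c: counts[c] / total for c in colours} if total else {}


# Invented sentences: the plain "sheep" mention contributes no colour at all.
corpus = [
    "he was the black sheep of the family",
    "she felt like the black sheep at work",
    "a field of sheep grazed quietly",
    "one white sheep stood by the gate",
]
print(colour_given_noun(corpus, "sheep", ("black", "white")))
```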

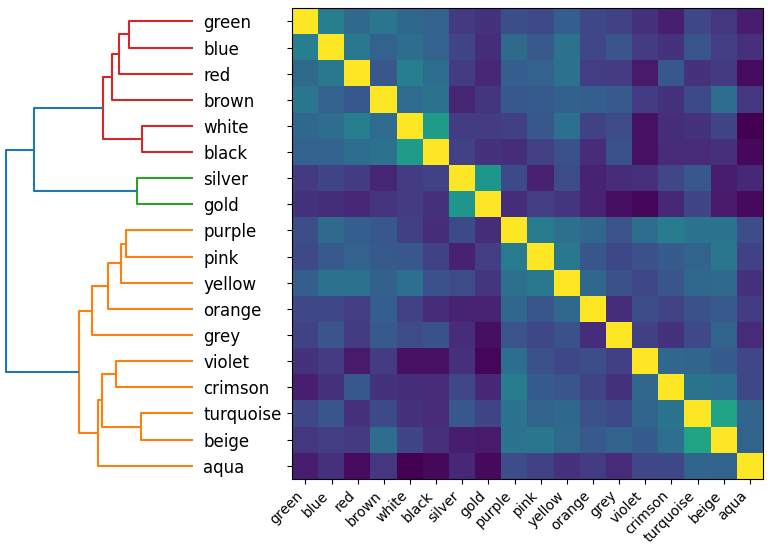

The colour embeddings (Figure 4) show that the cosine similarity between colours has very little to do with their visual similarity and much more to do with how they are used in sentences. Clear examples of this are the white-black pairing and the silver-gold cluster. Visually, silver is closer to grey, and gold to yellow, than they are to each other, but BERT's embeddings show no sign of this.
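Rankings like the ones in Figure 4 come down to sorting words by cosine similarity to a query vector. A minimal sketch, assuming a dictionary from word to vector; `rank_by_similarity` is a hypothetical helper, and the 2-dimensional vectors are made up purely to exercise the function.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def rank_by_similarity(query, embeddings):
    """Return the other words sorted from most to least similar to `query`."""
    q = embeddings[query]
    others = [w for w in embeddings if w != query]
    return sorted(others, key=lambda w: cosine(q, embeddings[w]), reverse=True)


# Made-up 2-d vectors, for illustration only (not BERT's real embeddings).
colours = {
    "silver": [0.9, 0.3],
    "gold": [0.8, 0.4],
    "grey": [0.2, 0.9],
    "yellow": [0.1, 1.0],
}
print(rank_by_similarity("silver", colours))  # ['gold', 'grey', 'yellow']
```

Cosine similarity ignores vector length, so it compares the directions of the embeddings only, which is why it is the usual choice for this kind of comparison.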

Addition

We test whether the "word offset technique" holds: the idea, conjectured in (Bengio, 2009), that performing simple algebraic operations on word vectors is linguistically valid. If it held, we would expect that

\(\text{queen} - \text{woman} + \text{man} \approx \text{king}\)

where each word denotes its embedding vector. While the embeddings clearly contain strong semantic information, it is not strong enough for us to achieve

\(\text{king} - \text{man} + \text{woman} \approx \text{queen}\)

Instead, the resulting embedding is still very close to "king":

\(\text{king} - \text{man} + \text{woman} \approx \text{king}\)

And likewise,

\(\text{queen} - \text{woman} + \text{man} \approx \text{queen}\)
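The nearest-neighbour check behind these results can be sketched as follows. Many word-analogy evaluations exclude the three input words from the candidate answers; without that exclusion, the query word itself can come out on top, which is the behaviour described above. The toy 3-dimensional vectors and the `exclude_inputs` flag are hypothetical, chosen so that the gender offset is small relative to the vectors themselves.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(embeddings, a, b, c, exclude_inputs=False):
    """Return the word whose vector is most cosine-similar to a - b + c."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if not (exclude_inputs and w in (a, b, c))]
    return max(candidates, key=lambda w: cosine(target, embeddings[w]))


# Hypothetical vectors: dimension 0 ~ royalty, dimension 1 ~ gender.
words = {
    "king": [1.0, 0.2, 0.3],
    "queen": [0.9, -0.4, 0.5],
    "man": [0.0, 0.1, 1.0],
    "woman": [0.0, -0.1, 1.0],
    "apple": [-0.5, 0.0, 0.2],
}
print(analogy(words, "king", "man", "woman"))                       # king
print(analogy(words, "king", "man", "woman", exclude_inputs=True))  # queen
```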

Gender

BERT was trained on BookCorpus (Zhu et al., 2015) and English Wikipedia, which could be considered fairly neutral sources of information; however, there are clear biases in BERT, including gender bias.

Sentence: Pronoun works as a [MASK].

| \(\text{He}\) | \(\text{She}\) | \(\frac{\text{He} + \text{She}}{2}\) |
|---|---|---|
| Lawyer 17.5% | Teacher 16.2% | Lawyer 18.7% |
| Farmer 9.3% | Model 13.5% | Teacher 10.9% |
| Teacher 9.1% | Journalist 9.1% | Journalist 8.7% |
| Journalist 7.9% | Lawyer 8.4% | Farmer 5.7% |
| Businessman 5.4% | Nurse 7.2% | Businessman 3.9% |

Averaging the two pronoun embeddings (Figure 5) reduces the bias in this example, producing a more gender-neutral pronoun. It also illustrates that the embedding layer plays an important role in the biases of language models, due to the incorrect semantic properties that each pronoun acquires during training.
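The averaged pronoun is simply the midpoint of the two embedding vectors. A minimal sketch with hypothetical 2-dimensional stand-ins for "he" and "she"; how the averaged vector is fed to the model is not specified here, and one assumed option is to overwrite a pronoun's row in the embedding matrix, as in the Rome modification.

```python
import math


def average_embedding(*vectors):
    """Element-wise mean of one or more equal-length vectors."""
    return [sum(values) / len(vectors) for values in zip(*vectors)]


# Hypothetical 2-d stand-ins for the "he" and "she" embeddings.
he, she = [1.0, 2.0], [3.0, 0.0]
neutral = average_embedding(he, she)
print(neutral)  # [2.0, 1.0]
# The midpoint is equally far (in Euclidean distance) from both pronouns.
print(math.isclose(math.dist(neutral, he), math.dist(neutral, she)))  # True
```

`math.dist` requires Python 3.8 or later.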

Limitations

BERT marked a fundamental change in the field of NLP; however, it has now been superseded by many other language models and is no longer the state of the art. Unfortunately, these techniques cannot be applied to language models that are not open source (OpenAI, 2023); that do not perform masked language modelling (Raffel et al., 2020), which we use in this evaluation; or that have tokens representing very few characters (Liu et al., 2019).

Additionally, the RoBERTa authors (Liu et al., 2019) found that, while BERT popularised large-scale pretraining, it was significantly undertrained, and that its next-sentence prediction task did not help: removing it matched or slightly improved downstream performance. As a result, BERT's embedding matrix is likely not as well-formed as it could be, and many biases may be present in BERT because they help it take shortcuts during prediction.

Conclusions

Embeddings in BERT contain a great deal of structure and semantic information, which we can exploit by performing embedding arithmetic. However, this structure is only loosely additive: it is not precise enough for nearest-neighbour search, but it is sufficient for modifying embeddings, which the model then processes in a consistent way. We also observe a lack of real-world information in the model, although newer multimodal approaches are addressing this issue.

Bibliography

Y. Bengio. Learning Deep Architectures for AI. Found. Trends Mach. Learn., 2009.

J. Devlin, M.-W. Chang, K. Lee, K. Toutanova. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv preprint arXiv:1810.04805, 2018.

H. Daumé. Language bias and black sheep. Natural Language Processing Blog, 2016.

Y. Zhu, R. Kiros, R. Zemel, R. Salakhutdinov, R. Urtasun, A. Torralba, S. Fidler. Aligning Books and Movies: Towards Story-Like Visual Explanations by Watching Movies and Reading Books. The IEEE International Conference on Computer Vision (ICCV), 2015.

J. Pennington, R. Socher, C. Manning. GloVe: Global Vectors for Word Representation. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2014.

OpenAI. GPT-4 Technical Report. arXiv preprint arXiv:2303.08774, 2023.

Y. Liu, M. Ott, N. Goyal, J. Du, M. Joshi, D. Chen, O. Levy, M. Lewis, L. Zettlemoyer, V. Stoyanov. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv preprint arXiv:1907.11692, 2019.

K. Meng, D. Bau, A. Andonian, Y. Belinkov. Locating and Editing Factual Associations in GPT. Advances in Neural Information Processing Systems, 2022.

C. Raffel, N. Shazeer, A. Roberts, K. Lee, S. Narang, M. Matena, Y. Zhou, W. Li, P. Liu. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. Journal of Machine Learning Research, 2020.

A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. Gomez, Ł. Kaiser, I. Polosukhin. Attention is All you Need. Advances in Neural Information Processing Systems, 2017.

T. Cai, R. Ma. Theoretical Foundations of t-SNE for Visualizing High-Dimensional Clustered Data. arXiv preprint arXiv:2105.07536, 2022.

Code

The code is available on GitHub: https://github.com/George-Ogden/embeddings